Artificial Intelligence (AI) promises transformative benefits for enterprises, from smarter decision-making to enhanced customer experiences. Yet, as a CTO, you know the reality: while many companies eagerly experiment with AI pilots, very few successfully scale them into enterprise-grade systems. This journey from a promising proof-of-concept to a production-ready system is the single greatest hurdle in today’s AI landscape. This guide explores how embracing a cloud-native infrastructure and robust MLOps practices can help you bridge that gap, ensuring AI delivers tangible business value and a measurable return on investment.

The High Stakes of AI Pilots: A Sobering Reality Check

AI pilots are often launched with high expectations: a new predictive model for customer churn, an automation tool for supply chain management, or a recommendation engine for a new product line. But the truth is, the majority of these initiatives fail to deliver. According to a recent report from MIT’s NANDA initiative, a shocking 95% of enterprise generative AI pilots are failing to deliver measurable business impact.

This isn’t just a number; it represents wasted resources, disillusioned teams, and a loss of competitive advantage.

The reasons for this high failure rate are multifaceted and a constant concern for IT leadership. They often include:

- Technical bottlenecks: Insufficient computing resources, incompatible legacy systems, and a lack of scalable deployment mechanisms.

- Organisational challenges: A lack of alignment between AI teams and business units, which can lead to models being built for the wrong problem.

- Data issues: Poor quality, inconsistent formats, or data trapped in organisational silos.

The consequence of these failures is far more than just a line item in a budget report. It can erode executive trust in AI initiatives and create a culture of risk aversion that stalls innovation for years. As a CTO, understanding these challenges in scaling AI initiatives beyond initial proof-of-concept stages is not just an operational task—it’s a strategic imperative.

Strategic Alignment: The CTO’s Role in Steering AI

Scaling AI is as much a business challenge as a technical one. Your most critical responsibility is ensuring that you are aligning AI initiatives with business goals. Without this, even the most technically impressive model is just a science project. As a McKinsey study noted, the biggest barrier to scaling AI is often a lack of clear leadership and strategic focus.

Successful alignment involves a three-step process:

- Start with the Business Problem: Move away from a “technology-first” mindset. Instead of asking, “What can we do with AI?”, ask, “What are our most pressing business needs?” This could be reducing operational costs by 20%, improving customer satisfaction scores by 15%, or decreasing fraud detection time by 50%. By framing AI projects around these tangible metrics, you can secure executive buy-in and demonstrate clear ROI.

- Define Clear Success Metrics: Your success metrics should move beyond a model’s technical accuracy. While a data scientist might celebrate a model with 95% precision, a business leader cares about its adoption rate, the operational efficiency it unlocks, or the revenue uplift it generates.

- Foster Cross-Functional Collaboration: Break down the silos between your data science, engineering, and business teams. Establish clear ownership and communication channels to ensure everyone is working towards a shared objective.

By anchoring AI projects in strategic goals, you prevent the common pitfall of “pilot purgatory,” where promising models fail to deliver enterprise-wide value.

Building a Cloud-Native Foundation for AI

A fundamental enabler of AI at scale is a cloud-native infrastructure. Cloud-native design principles—modularity, scalability, and resilience—allow AI systems to be flexible, adaptable, and production-ready. This approach is not a “nice-to-have”; it is the very backbone of a modern, scalable AI strategy.

The benefits for an AI-first organisation are profound:

- Scalability: A cloud-native infrastructure allows you to easily handle increasing workloads and model complexity without massive hardware investments. You can dynamically allocate GPUs for model training and scale down resources when they aren’t needed, optimising costs.

- Reliability: Ensure uptime and fault tolerance through container orchestration with tools like Kubernetes and a microservices-based architecture. If one component fails, the entire system doesn’t.

- Modularity: Build independent AI components that can be updated or replaced without disrupting the entire system, accelerating the pace of innovation.

Key tools for this transformation include:

- Kubernetes: The de facto standard for orchestrating containers, enabling you to manage compute resources efficiently and scale horizontally.

- Infrastructure-as-Code (IaC): Tools like Terraform or CloudFormation automate the provisioning and management of your cloud environment, ensuring consistency and reproducibility.

- Managed AI Services: Platforms like AWS SageMaker, Azure ML, and Google Vertex AI abstract away much of the infrastructure complexity, allowing your teams to focus on what they do best: building models.

Designing a cloud-native infrastructure is a strategic decision that lays the foundation for sustainable AI growth. It’s the difference between a one-off pilot and a scalable, repeatable AI factory.

Ready to move beyond pilots and build AI that drives real business value?

The MLOps Imperative: Operationalising AI at Scale

MLOps, or Machine Learning Operations, is the bridge that connects the experimental world of data science with the rigorous demands of production software. It introduces software engineering best practices to AI systems, enabling repeatable, reliable, and monitored model operations.

The core components of a robust MLOps pipeline are what truly enable you to start creating scalable AI infrastructure:

- Continuous Integration & Deployment (CI/CD): This goes beyond code. For AI, CI/CD pipelines automate the testing and deployment of not just the model’s code, but also the data and the model itself. A single change in a data pipeline or a model’s hyperparameters can trigger a complete re-training, testing, and deployment cycle.

- Model Versioning & Tracking: Ensure full reproducibility and auditability. Every model, dataset, and set of hyperparameters is meticulously tracked and versioned, making it easy to roll back to a previous, more stable version if something goes wrong.

- Monitoring & Retraining Pipelines: Models degrade over time. Data drift (the statistical properties of the data change) and concept drift (the relationship between the input and output variables changes) are inevitable. MLOps tools provide automated monitoring to detect this performance decay and trigger alerts or even an automated retraining cycle. This continuous feedback loop is what keeps your AI systems relevant and accurate in a dynamic business environment.

By implementing MLOps on a cloud-native infrastructure, organisations can create a seamless path from experimentation to production. This operational rigor is what separates companies with a few successful pilots from those who have AI truly woven into their business fabric.

A Critical Pillar: Data Governance and the Rise of the Feature Store

Data is the lifeblood of AI, and its management is a CTO-level concern. Without proper governance, even the most advanced scalable AI infrastructure can fail. A key component of modern data governance for AI is the Feature Store.

A feature store is a centralised repository that allows teams to discover, share, and manage curated data features for machine learning models. This solves a major problem: data scientists often spend up to 80% of their time on data preparation. A feature store standardises features, ensures consistency between training and production environments, and acts as a central source of truth, making your AI development more efficient and secure.

Key aspects of data governance for enterprise AI include:

- Data Quality: Ensure data is accurate, consistent, and complete through automated validation and cleansing pipelines.

- Accessibility & Security: Make data available to AI pipelines while adhering to strict security protocols, access controls, and encryption standards.

- Compliance: Implement policies for data privacy (e.g., GDPR, CCPA) and maintain audit trails for transparency and accountability.

A strong data governance framework complements MLOps and cloud-native infrastructure, making AI systems not just scalable, but also secure and trustworthy.

Building the Right Team and Culture

Technology alone is insufficient. Successfully scaling AI requires a culture of collaboration and a team with the right skill sets. As the leader of your IT organisation, you must:

- Upskill Your Existing Staff: Train data scientists and engineers in MLOps practices. The best teams have “T-shaped” individuals who combine deep data science knowledge with a broad understanding of software and cloud engineering.

- Foster Cross-Functional Collaboration: Establish clear ownership and communication channels between business and technical teams. AI can no longer be a separate department’s responsibility.

- Invest in Continuous Learning: The AI landscape evolves at a breakneck pace. Offer career growth, challenging projects, and learning opportunities to retain top AI talent.

A forward-looking strategy ensures that AI systems remain scalable, adaptable, and aligned with business goals.

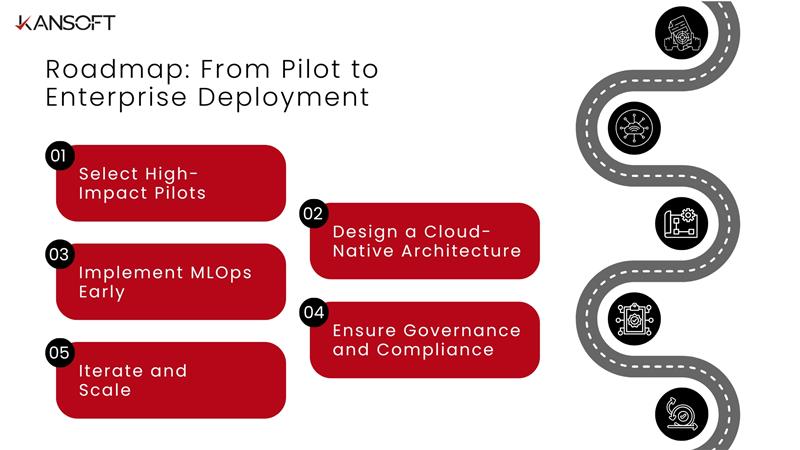

Roadmap: From Pilot to Enterprise Deployment

For a CTO, a structured approach is essential. A practical roadmap to overcome the challenges in scaling AI initiatives beyond initial proof-of-concept stages includes:

- Select High-Impact Pilots: Prioritise projects that are small in scope but directly tied to a major business metric.

- Design a Cloud-Native Architecture: Build your infrastructure with scalability and resilience in mind from day one.

- Implement MLOps Early: Don’t wait until a pilot is “successful” to build your pipelines. Automate as much as possible from the start.

- Ensure Governance and Compliance: Proactively protect data and maintain auditability to build trust within the organisation.

- Iterate and Scale: Gradually expand successful pilots, applying lessons learned and continuously improving your pipelines.

By following this roadmap, enterprises can avoid common pitfalls and maximise the ROI of their AI investments.

Ready to build a production-ready AI strategy?